A comparison between the use of perceptron neural networks and deep neural networks for radionuclide identification in gamma spectrometry

DOI:

https://doi.org/10.15392/bjrs.v8i1.1132

Keywords:

neural networks, deep neural networks, characterization, gamma spectrometry

Abstract

We present the results of a comparison between a deep neural network and a perceptron neural network in classifying gamma spectra acquired with a hyper-pure germanium detector. Using data from several sealed sources (Am-241, Ba-133, Cd-109, Co-57, Co-60, Cs-137, Eu-152, Mn-54, Na-24, and Pb-210), we generated training and validation sets containing 500 and 160 spectra, respectively, in which up to three radionuclides were mixed into a single spectrum. After 250 training epochs, the accuracy of each model was evaluated on the validation set. The deep neural network achieved a classification accuracy of 96.25%, whereas the perceptron neural network achieved 80.62%. The results show robust and consistently better performance from deep neural networks compared with perceptron neural networks.
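The data set construction described in the abstract can be illustrated with a minimal sketch. It is not the authors' code: the single-source templates are random placeholders rather than measured HPGe spectra, the channel count `N_CHANNELS` is hypothetical, "mixing" is assumed to mean a channel-wise sum, and the names `fake_template`, `mix_spectrum`, `make_dataset`, and `accuracy_percent` are invented for illustration. Only the nuclide list, the limit of three radionuclides per spectrum, and the 500/160 split come from the abstract.

```python
import random

NUCLIDES = ["Am-241", "Ba-133", "Cd-109", "Co-57", "Co-60",
            "Cs-137", "Eu-152", "Mn-54", "Na-24", "Pb-210"]
N_CHANNELS = 64  # hypothetical; real HPGe spectra have far more channels


def fake_template(rng):
    """Placeholder single-source spectrum: random values, NOT real data."""
    return [rng.random() for _ in range(N_CHANNELS)]


def mix_spectrum(templates, rng, max_sources=3):
    """Sum 1..max_sources single-source spectra (assumed mixing rule).

    Returns the mixed spectrum and a multi-hot label over NUCLIDES.
    """
    k = rng.randint(1, max_sources)
    chosen = rng.sample(sorted(templates), k)
    spectrum = [0.0] * N_CHANNELS
    for name in chosen:
        for i, counts in enumerate(templates[name]):
            spectrum[i] += counts
    label = [1 if name in chosen else 0 for name in NUCLIDES]
    return spectrum, label


def make_dataset(n, templates, rng):
    """Build n (spectrum, label) pairs."""
    return [mix_spectrum(templates, rng) for _ in range(n)]


def accuracy_percent(n_correct, n_total):
    """Accuracy as the percentage of correctly classified spectra."""
    return 100.0 * n_correct / n_total


rng = random.Random(0)  # fixed seed so the sketch is repeatable
templates = {name: fake_template(rng) for name in NUCLIDES}
train = make_dataset(500, templates, rng)  # training set size from the abstract
valid = make_dataset(160, templates, rng)  # validation set size from the abstract
```

If accuracy is taken to be the fraction of validation spectra classified correctly (an assumption, since the abstract does not define the metric), `accuracy_percent(154, 160)` gives 96.25 and `accuracy_percent(129, 160)` gives 80.625, which is consistent with the reported 96.25% and 80.62%.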

Descargas

Publicado

Número

Sección

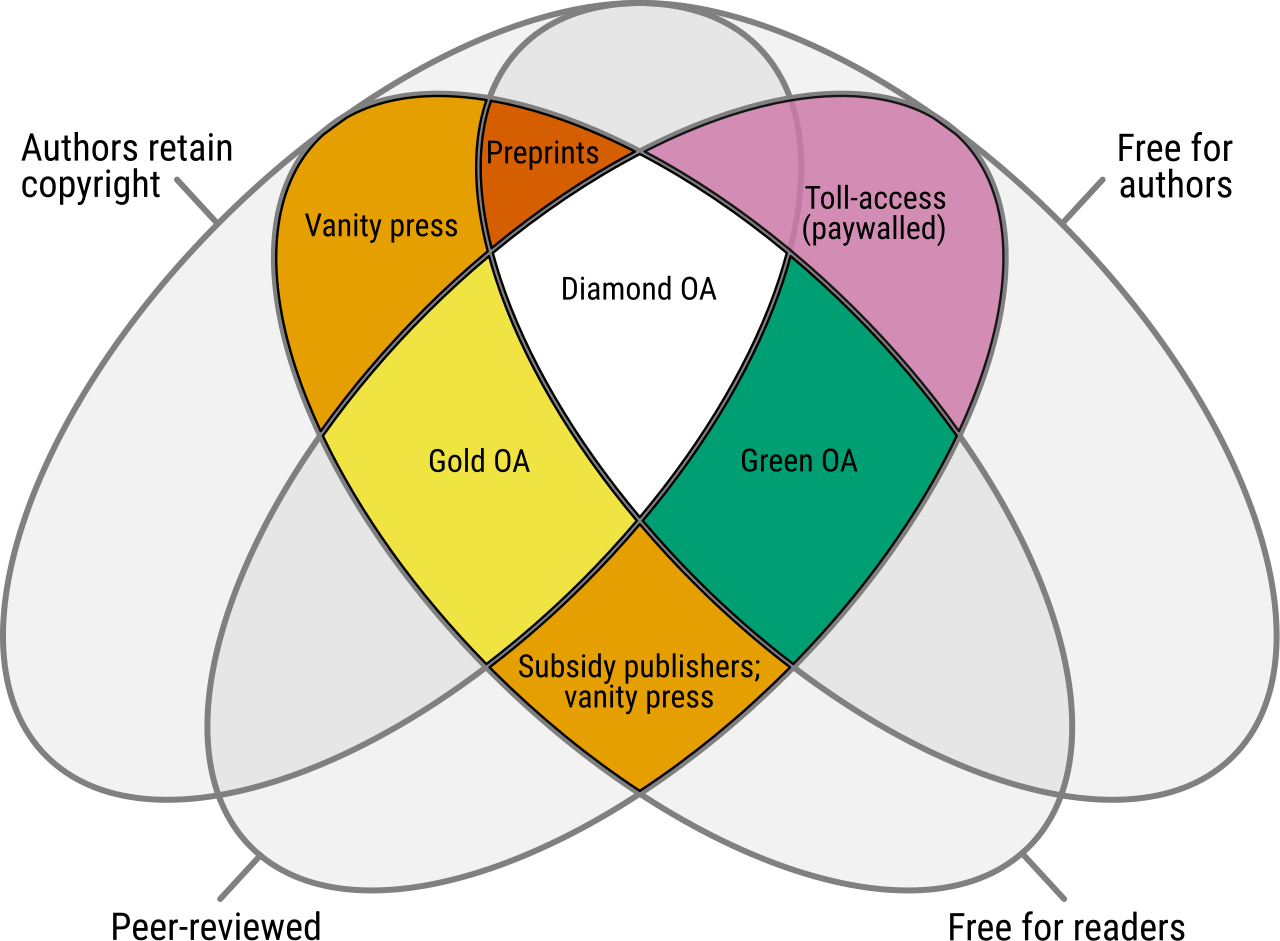

License

Copyright 2020 Brazilian Journal of Radiation Sciences (BJRS)

This work is licensed under a Creative Commons Attribution 4.0 International License.

License: BJRS articles are licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution, and reproduction in any medium or format, provided that you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate whether changes were made. Images or other third-party material in the article are included in the article's Creative Commons license unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and the intended use is not permitted by statutory regulation or exceeds the permitted use, the author must obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/