A comparison between the use of perceptron neural networks and deep neural networks for radionuclide identification in gamma spectrometry

DOI:

https://doi.org/10.15392/bjrs.v8i1.1132

Keywords:

neural networks, deep neural networks, characterization, gamma spectrometry

Abstract

We present the results of a comparison between a Deep Neural Network and a Perceptron Neural Network for classifying gamma spectra obtained with a high-purity germanium (HPGe) detector. Using data from several sealed sources (Am-241, Ba-133, Cd-109, Co-57, Co-60, Cs-137, Eu-152, Mn-54, Na-24, and Pb-210), we generated an extensive set of spectra for training and validation, containing 500 and 160 spectra respectively, in which up to three radionuclides were mixed into a single spectrum. After 250 training epochs, the accuracy of each model was evaluated on the validation set. The deep neural network achieved a classification accuracy of 96.25%, while the perceptron neural network achieved 80.62%. These results show a robust and consistently better performance of deep neural networks compared with perceptron neural networks.
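The abstract describes two concrete steps: building training and validation sets by mixing up to three radionuclides into one spectrum, and scoring each model's accuracy on the validation set. The following is a minimal sketch of those two steps only, in standard-library Python, under loudly stated assumptions: the single-source "spectra" are hypothetical stand-ins (one Gaussian peak per nuclide at made-up channels, not measured HPGe data), mixing is a randomly weighted sum plus a flat background normalized to unit area, labels are multi-hot vectors over the ten nuclides, and accuracy is counted as an exact match of the whole label vector per spectrum. All function names and parameters (`make_templates`, `mix_spectrum`, `N_CHANNELS`, the weight range, the background level) are illustrative, and neither neural network is implemented here.

```python
import math
import random

# The ten sealed sources listed in the abstract.
NUCLIDES = ["Am-241", "Ba-133", "Cd-109", "Co-57", "Co-60",
            "Cs-137", "Eu-152", "Mn-54", "Na-24", "Pb-210"]

N_CHANNELS = 64  # hypothetical; a real HPGe spectrum has thousands of channels


def make_templates(sigma=1.5):
    """Hypothetical stand-ins for measured single-source spectra:
    one Gaussian peak per nuclide at a distinct, made-up channel."""
    templates = {}
    for i, name in enumerate(NUCLIDES):
        centre = 3 + 6 * i  # distinct illustrative peak positions (3..57)
        templates[name] = [
            math.exp(-0.5 * ((ch - centre) / sigma) ** 2)
            for ch in range(N_CHANNELS)
        ]
    return templates


def mix_spectrum(templates, rng, max_components=3, background=0.01):
    """Sum 1..max_components randomly chosen templates with random weights,
    add a flat background, normalize to unit area, return (spectrum, label)."""
    k = rng.randint(1, max_components)          # inclusive on both ends
    chosen = rng.sample(NUCLIDES, k)            # k distinct nuclides
    spectrum = [background] * N_CHANNELS
    for name in chosen:
        weight = rng.uniform(0.2, 1.0)          # hypothetical relative activity
        for ch, counts in enumerate(templates[name]):
            spectrum[ch] += weight * counts
    total = sum(spectrum)
    spectrum = [c / total for c in spectrum]
    label = [1 if name in chosen else 0 for name in NUCLIDES]  # multi-hot
    return spectrum, label


def make_dataset(n, seed):
    """Generate n mixed spectra with a reproducible random seed."""
    rng = random.Random(seed)
    templates = make_templates()
    return [mix_spectrum(templates, rng) for _ in range(n)]


def exact_match_accuracy(y_true, y_pred):
    """Fraction of spectra whose whole label vector is predicted correctly."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if list(t) == list(p))
    return hits / len(y_true)


if __name__ == "__main__":
    train = make_dataset(500, seed=1)  # 500 training spectra, as in the abstract
    val = make_dataset(160, seed=2)    # 160 validation spectra
    print(len(train), len(val))
    # Reported accuracies expressed as correct counts out of 160 spectra.
    print(100 * 154 / 160, 100 * 129 / 160)
```

Run as a script, this prints `500 160` and then `96.25 80.625`. If accuracy is indeed counted per validation spectrum, the reported figures correspond to 154 and 129 of the 160 spectra classified correctly (129/160 = 80.625%, which the abstract gives as 80.62%); a per-label metric would imply different counts.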



Published

Issue

Section

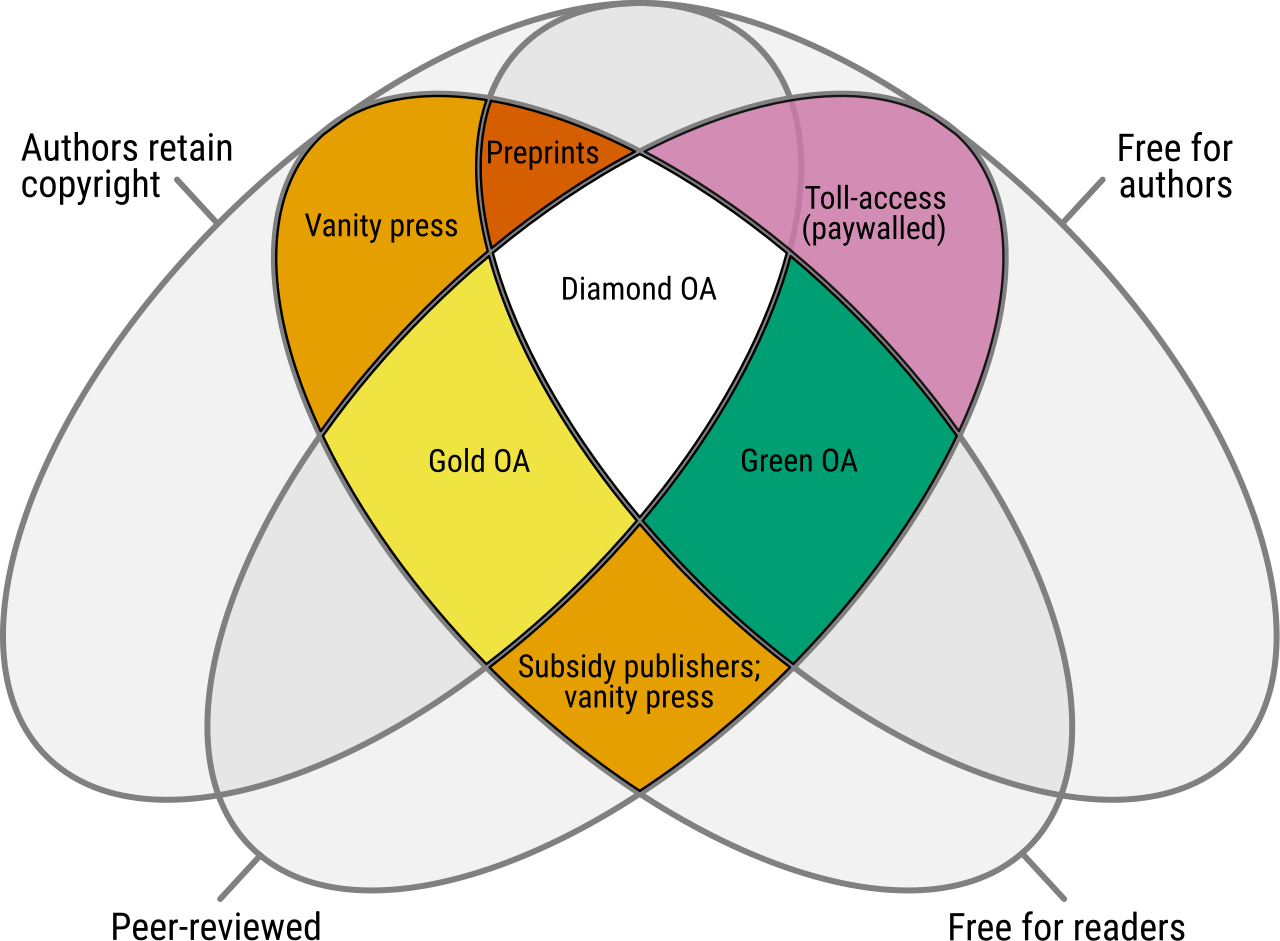

License

Copyright (c) 2020 Brazilian Journal of Radiation Sciences

This work is licensed under a Creative Commons Attribution 4.0 International License.

Licensing: The BJRS articles are licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/